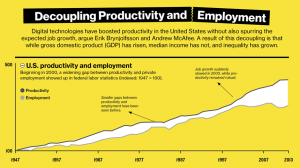

A recent MIT Technology Review article reveals an alarming trend. Since the early 2000s, and increasingly since the financial crisis of 2008, levels of employment have decreased even though productivity has increased over the same period. Erik Brynjolfsson and Andrew McAfee call this the “great decoupling” of productivity and employment. Historically, as productivity increases, so too do employment and the wealth of nations. Since WWII, increases in productivity have been tracked closely by increases in job creation. However, recent figures show that something new is happening: productivity continues to increase but employment flatlines.

The culprit, McAfee and Brynjolfsson argue, is the impressive advance in computer technology that is changing everything, not only in manufacturing, clerical and retail work but also in professional services such as law, education, medicine and financial services.

For anyone working in agriculture or manufacturing, the idea that machines, software, automation and robots can replace people may seem obvious. Today, however, we are seeing the emergence of a new trend: the information technologies that make our jobs safer and easier and boost productivity are now also reducing the demand for many types of knowledge workers.

Although not clear cut, there is a growing pool of evidence to suggest that advances in artificial intelligence, big data analytics, storage capacity and processing speeds mean that knowledge worker jobs previously believed to be safe from machine automation are being destroyed faster than they are being created.

Since the Industrial Revolution in the 1700s, technology has significantly changed the way we work and the nature of work, and has destroyed some types of jobs in the process. In 1900, 41 percent of the US population worked in farming. By 2000 this figure had shrunk to 2 percent, even though productivity had increased significantly. The trend is the same in manufacturing, where employment dropped from 30 percent in the post-WWII years to 10 percent today. Although these shifts were painful for the workers involved at the time, historical patterns do not reveal a net decrease in jobs over an extended period of time. If anything, new technologies have created new jobs and new wealth, even if it took years for workers to acquire the expertise needed for new types of employment.

Lawrence Katz, a Harvard economist, believes that the disruption in job creation and productivity improvements is a blip. He sees no trend of eliminating work for people, believing rather that employment rates stay relatively stable over the long term, saying that “people have always been able to create new jobs. People come up with new things to do.” That is of great comfort for the longer term, but what of today? What will happen to your job over the next decade or two? Even Katz agrees that something different is happening as a result of today’s new digital technologies.

The question as we look into the future is whether history will serve as a good guide or whether, if technology truly disrupts enough, we will see a science-fiction scenario in which automated processes and robots with superhuman skills take over huge swaths of human tasks.

There is no doubt that the impressive improvements and innovations in computing technology will eliminate some jobs, but what is more likely to happen is that advances in automation and artificial intelligence will make human workers more efficient rather than replace them. I believe that Katz is correct. Human workers will never be entirely replaced by machines, but people are going to struggle through a period of disruption as they learn how to work with machines capable of doing a better job than they can. The most successful workers will be those who understand the unique value they bring to their job, the things that computers cannot do, and then integrate that with what machines do best in a way that enhances productivity and delivers gains for the business beyond the cost of producing them.

The immediate challenge for leaders and workers will be to manage the integration across the business in a way that helps people realise the benefits of this technology without having to fear its repercussions. This will not be an easy task and will stretch the skills of many leaders, who themselves will have to adapt as smart machines become more ubiquitous in the lives of knowledge and professional workers. We’ve been predicting this for a long time – see our blog “Your future boss may be a robot”, written in May 2011.

Information now moves with lightning speed, and advances in speech recognition and big data analytics mean it is increasingly easy and fast for a worker to ask a computer, using even unstructured commands, to perform a task rather than ask a colleague to do it for them. For example, a manager may want to undertake a competitor analysis or an analysis of customer complaints, or find new patterns in shopping and buying behaviours. Rather than approach the analytics or research team at head office and then wait several days or weeks for the report, the manager will be able to ask the “Siri” equivalent of the research department a question, and the results will be delivered almost immediately. The proliferation of smart apps in business means that these kinds of knowledge tasks can be performed in real time, enhancing the customer experience, delivering immediate results and massively increasing productivity.

Professional and clerical jobs appear to be most vulnerable. The days of telling your children to become a doctor, lawyer or accountant because it will ensure a job for life are over. This is because the marriage of AI, big data, and voice and gesture recognition is beginning to give machines an almost humanlike ability to reason and solve many new types of problems. IBM’s Watson, famed for beating human champions on the game show Jeopardy!, is now working in the field of medicine. Watson has analysed 605,000 pieces of medical evidence, 2 million pages of text, 25,000 training cases and 14,700 clinician hours, making it, or so the claims go, significantly more accurate in its diagnoses than doctors. IBM calls this cognitive computing, whereby huge amounts of computing power can quickly offer up “advice” for doctors and pharma scientists to act on. Dr Watson may not be diagnosing your illness today. As impressive as these advances are, Watson still lacks the judgement and intuition a physician often needs. But these are early days, and we are not far off having our own personal dietician, nutritionist and GP all wrapped up neatly into one app.

The jobs most vulnerable to this disruption appear to be those in the middle and, as McAfee states, “Many middle-class jobs are right in the bulls-eye, even high-skill work in education, medicine and law is affected”. Research by MIT economist David Autor shows that the middle class is suffering both in share of jobs and in wage growth. Advances in digital technology appear to be helping the bottom and the top ends the most, and this could lead to an increase in social disruption as a high percentage of middle-class workers find themselves without opportunities to increase their living standards. Most alarmingly, this trend suggests that unless policy makers make the adjustments required to prepare workers for this new digital, automated, machine-driven world, we are fast approaching a period of massive social disruption. The US prospered in the early 1900s because the authorities of the time made secondary education accessible to many people at a time when jobs in agriculture were drying up. New steps are again required as white-collar jobs dry up. Educators and policy makers need to stop educating people for jobs that will no longer be relevant or even available when they graduate. Business also needs to invest in helping the existing workforce to learn, unlearn and relearn.

Dean, great blog entry. I read this article just before yours: http://www.huffingtonpost.com/axelle-tessandier/work-life-balance_b_3559474.html

I think this author has it right too:

QUOTE:

In a world where more and more tasks, jobs and missions will be completed by artificial intelligence, creativity will be a competitive advantage as well as a passport to success.

Analyzing and comprehending data may allow companies to understand the present, but it will never allow them to predict or define the next revolutionary idea or product. Envisioning the next breakthrough will require instinct, guts, emotion and most of all, passion.

We can all be and need to be creative. We just need to give ourselves the permission to follow our passions and fulfill our visions.

Loving what you do creates engagement. Only 30 percent of American employees feel engaged or inspired by their jobs. If you think having a meaningful job is “nice to have” or a generational quest, try imagining a world where 99 percent of us are engaged in our mission. Loving what you do is imperative to being creative and pushing the boundaries in order to invent our future.

I know some find the millennials’ obsessive quest to be passionate about their jobs, or to find a mission that resonates with their values, to be naive. But seeking work they are passionate about and finding themselves along the way is not simply a tantrum thrown by a spoiled generation. Learning about oneself is the only way to discover how to best contribute to the community. Identity is the basis of any collective involvement. Can I give my best when I am lost? Can I be passionate about what I do if I cannot project who I am in it? Can I feel empathy when I do not even love or know who I am?

….

Loving your job is no longer a luxury, and will soon be a necessity. Loving what you do and finding yourself in it is not asking too much. Instead, it must be encouraged, not only in small businesses and innovative companies, but in all corporate structures. Creativity and productivity are not forced into existence, they come from loving what you do.

There was a time where work and life were conceptualized as two different things. This absurd time is over. Work is not a box we need to fit in. Work, as part of your life, is also your meaning. I am not scared by this merge because I have never really understood the notion of work-life balance. When you are not engaged in what you do and it’s killing you inside, simply leaving the office at 5 p.m. won’t fix the problem. Loving what you do means respecting who you are and your internal resources. Not burning them even if it’s only from 9 to 5.

Loving what you do is freedom, the key value of a digital generation empowered by access to the world.